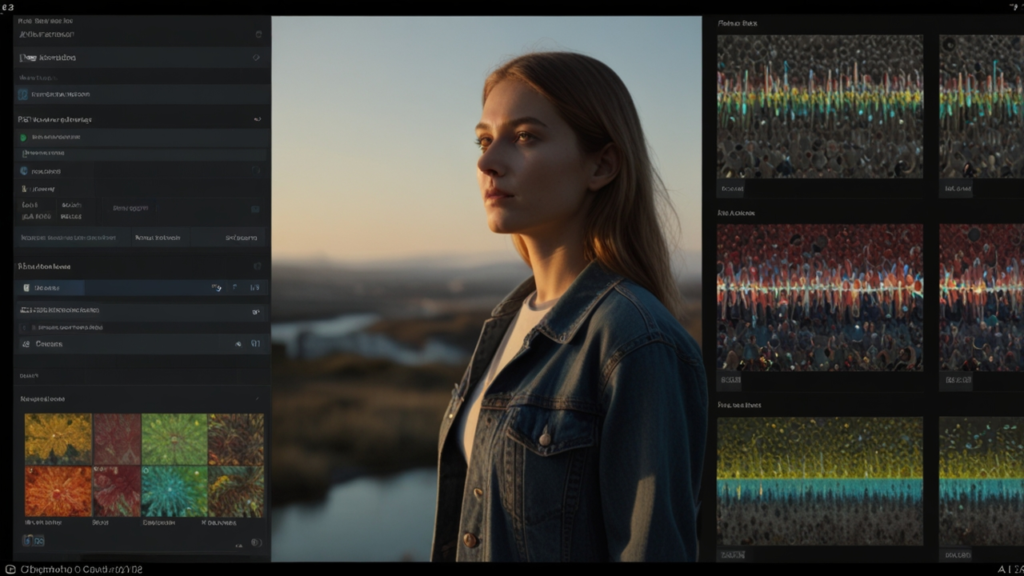

Image Generator: 3 Revolutionary Techniques

Welcome to our deep dive into the world of artificial intelligence in visual creation. In this article, you will discover how sophisticated tools are reshaping the way images are produced and creatively enhanced. We explore historical breakthroughs, emerging trends, and real-world applications in a friendly and accessible manner.

This detailed guide is structured to help you understand the evolution and future potential of the leading imaging technology. Whether you are new to the topic or already familiar with it, our engaging discussion will offer valuable insights. You are invited to explore the fascinating journey of technological creativity.

As you read on, consider how these innovative methods resonate with your own experiences in digital design and creative technology. Feel free to leave comments, share thoughts, or reach out for more information along the way.

Table of Contents

- Introduction to Image Generator

- Evolution and History of Image Generator

- How AI Visual Creator Enhances Image Generator

- Picture Synthesis Algorithm Systems and Their Applications

- Real-World Case Studies of Image Generator

- Automated Art Creator in Modern Image Generator Solutions

- Future Trends: Digital Image Synthesizer and Beyond

Introduction to Image Generator

Core Principles and Functionality

Tools for creating and altering images computationally have been in development since the early days of digital image processing. The journey started in the 1960s, when simple algorithms were introduced to alter and enhance images. As computing power increased and machine learning models emerged, intelligent features allowed these systems to produce ever more realistic images.

Innovations in underlying computational methods led to breakthroughs in 2014 with the introduction of Generative Adversarial Networks (GANs). This milestone revolutionized the field with its two-network design, where one network generates images and the other evaluates them. Such a system provided an effective way to produce near-photorealistic visuals.

Have you ever wondered how these early algorithms paved the way for today’s advanced systems?

Overview of Capabilities and Impact

The abilities of this innovative tool extend far beyond simple image creation. It integrates advanced models that adjust to user inputs, synthesizing images that adhere to various styles and specifications. These functionalities have democratized creative processes, enabling professionals and hobbyists alike to generate compelling visuals with minimal technical know-how.

Through iterative advancements and continuous research, this technology has empowered industries ranging from advertising to education. It supports rapid prototyping, customized aesthetics for branding, and even entire art installations. The impact of this creative technology is now felt globally as more sectors adopt these solutions.

In your experience, how critical do you find the role of such tools in transforming creative workflows?

Evolution and History of Image Generator

Historical Milestones and Breakthroughs

The evolution of visual synthesis began in the 1960s with rudimentary digital image processing techniques. Early milestones involved the experimentation with simple filters and basic algorithms. Progress was gradual due to limited computational capacities and scarce data availability.

A significant breakthrough occurred in 2014 with the development of GANs by Ian Goodfellow and colleagues. GANs introduced a competitive framework between two neural networks—a generator and a discriminator—leading to the creation of synthetic images that were nearly indistinguishable from real photographs.
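The competition between generator and discriminator can be made concrete with the loss each network tries to minimize. The following is a minimal sketch in plain Python, assuming the common binary cross-entropy formulation with the non-saturating generator loss; the probability values are made up for illustration and no real networks are trained here.

```python
import math

def bce(prob, target, eps=1e-12):
    """Binary cross-entropy for a single predicted probability."""
    prob = min(max(prob, eps), 1.0 - eps)
    return -(target * math.log(prob) + (1.0 - target) * math.log(1.0 - prob))

def discriminator_loss(real_probs, fake_probs):
    """The discriminator wants real images scored near 1 and fakes near 0."""
    real_term = sum(bce(p, 1.0) for p in real_probs) / len(real_probs)
    fake_term = sum(bce(p, 0.0) for p in fake_probs) / len(fake_probs)
    return real_term + fake_term

def generator_loss(fake_probs):
    """The generator wants the discriminator to score its fakes near 1."""
    return sum(bce(p, 1.0) for p in fake_probs) / len(fake_probs)

# Hypothetical discriminator outputs: probability that each image is real.
confident_d = discriminator_loss(real_probs=[0.9, 0.8], fake_probs=[0.1, 0.2])
fooled_d = discriminator_loss(real_probs=[0.5, 0.5], fake_probs=[0.5, 0.5])

print(f"discriminator loss when it tells them apart: {confident_d:.3f}")
print(f"discriminator loss when it is guessing:      {fooled_d:.3f}")
print(f"generator loss when fakes are caught:        {generator_loss([0.1, 0.2]):.3f}")
print(f"generator loss when fakes pass as real:      {generator_loss([0.9, 0.8]):.3f}")
```

The two losses pull in opposite directions: whatever lowers the generator's loss (fakes scored as real) raises the discriminator's, which is the adversarial pressure that pushes the generated images toward realism.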

This approach built on groundwork laid five years earlier: in 2009, Fei-Fei Li’s research group, later based at Stanford, introduced the ImageNet dataset, which now provides over 14 million labeled images. This critical resource accelerated advances in computer vision and, in turn, image synthesis, as recognized in numerous academic studies and detailed histories of image synthesis.

Do you think earlier computational limitations hindered experiments in digital visual art?

Global Contributions and Open-Source Movements

Over the decades, contributions to this technology have come from all corners of the globe. The Americas and Europe have been pivotal, contributing foundational research and widely used platforms such as OpenAI’s DALL·E and Stability AI’s open-source Stable Diffusion. Meanwhile, Asian countries, particularly Japan and South Korea, have embraced the technology, integrating their own datasets and adaptation methods to suit local industries such as manga and K-pop visuals.

Australia has also played a role by focusing on ethical frameworks and educational integration, encouraging responsible use of these advanced systems. The blend of global perspectives has fostered a thriving ecosystem for innovation. For detailed timelines, see an overview of the AI timeline.

How do you view the influence of global collaboration on technological progress?

How AI Visual Creator Enhances Image Generator

Integration of Diffusion Models for Enhanced Detail

The integration of diffusion models marks a recent leap in visual synthesis. These models work by transforming random noise into high-quality images via iterative denoising. Essentially, the system learns to reverse the addition of noise, allowing it to generate highly detailed imagery. This approach significantly improves the diversity and realism of the visuals produced by the system.
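The noising process that a diffusion model learns to reverse can be sketched in a few lines. This is a toy illustration in plain Python, assuming the linear noise schedule popularized by the original DDPM work; the three-value "image" and the function names are hypothetical, and the noise estimate is supplied exactly rather than predicted by a trained network.

```python
import math
import random

def alpha_bar_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative product of (1 - beta_t) for a linear beta schedule."""
    alpha_bars, running = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def add_noise(x0, alpha_bar, rng):
    """Forward process: x_t = sqrt(a) * x0 + sqrt(1 - a) * noise."""
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(alpha_bar) * x + math.sqrt(1.0 - alpha_bar) * n
          for x, n in zip(x0, noise)]
    return xt, noise

def recover_x0(xt, noise_estimate, alpha_bar):
    """Invert the forward step, given an estimate of the noise that was added."""
    return [(x - math.sqrt(1.0 - alpha_bar) * n) / math.sqrt(alpha_bar)
            for x, n in zip(xt, noise_estimate)]

rng = random.Random(0)
pixels = [0.1, 0.5, 0.9]  # a toy "image" of three pixel values
alpha_bars = alpha_bar_schedule(1000)
t = 500
noisy, true_noise = add_noise(pixels, alpha_bars[t], rng)

# With a perfect noise estimate the original comes back exactly;
# a trained network can only approximate the noise.
restored = recover_x0(noisy, true_noise, alpha_bars[t])
print("noisy:   ", noisy)
print("restored:", restored)
```

A real sampler does not jump back in one step as this sketch does. It starts from pure noise and applies many small denoising steps, each using the network's current noise prediction, which is where the iterative character of the method comes from.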

Studies have shown that diffusion models can reproduce intricate details, making them well suited to generating complex scenes. For instance, platforms like Stable Diffusion, Midjourney, and DALL·E have implemented these models with impressive efficiency. Technical descriptions and further research can be found in a detailed guide on image generation models.

Does the shift toward diffusion models change your perspective on creative image synthesis?

Refinement Through Text-to-Image Synthesis

The advent of text-to-image synthesis has revolutionized creative inputs into image production. Through this method, users convert simple text prompts into visual content by describing the characteristics they want. Advanced models such as DALL·E and Midjourney are trained to match the generated images closely to user intent.

This process relies on prompt engineering, the careful wording of the text description, so that the final output aligns with what the user has in mind. Over time, refinements in this technology have made it possible for even novice users to produce high-quality visuals that represent their creative ideas faithfully. Detailed analyses of these integrations appear in articles on generative AI timelines.
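Prompt engineering often comes down to assembling a description from consistent parts. The helper below is a hypothetical sketch, not the API of any particular tool: the `build_prompt` function and its field names are assumptions for illustration, and the separate negative prompt is something only some tools accept.

```python
def build_prompt(subject, style=None, details=(), negative=()):
    """Assemble a comma-separated prompt; the field names are illustrative only."""
    parts = [subject.strip()]
    if style:
        parts.append(f"in the style of {style.strip()}")
    parts.extend(d.strip() for d in details if d.strip())
    prompt = ", ".join(parts)
    negative_prompt = ", ".join(n.strip() for n in negative if n.strip())
    return {"prompt": prompt, "negative_prompt": negative_prompt}

request = build_prompt(
    subject="a lighthouse on a rocky coast",
    style="watercolor painting",
    details=["soft morning light", "muted colors"],
    negative=["text", "watermark"],
)
print(request["prompt"])
print(request["negative_prompt"])
```

Keeping subject, style, and details as separate fields makes it easy to vary one of them at a time and compare the resulting images.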

Are you intrigued by the capability to create images just from descriptive text?

Picture Synthesis Algorithm Systems and Their Applications

Style Transfer and Customization Techniques

Style transfer techniques play an integral role in adapting existing images to new artistic styles. By applying the aesthetics of famous artworks or popular visual motifs, these algorithms can transform photographs into visually stunning masterpieces. Popular applications include digital art apps like Prisma and DeepArt, which have brought these techniques to the mass market.

This method is especially popular in Asia, where there is a cultural emphasis on distinctive art styles such as manga and K-pop visuals. The ability to mix and merge styles has opened up endless creative possibilities, encouraging novel explorations in visual design.
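One well-known way to measure "style" in neural style transfer, introduced by Gatys and colleagues, compares Gram matrices of feature maps: the correlations between channels, regardless of where in the image they occur. The sketch below assumes tiny hand-written feature maps; in practice they would come from a pretrained convolutional network, and whether any particular app uses exactly this loss is not something this article confirms.

```python
def gram_matrix(features):
    """features: list of channels, each a flat list of activations.
    Entry (i, j) is the dot product of channel i and channel j."""
    return [[sum(a * b for a, b in zip(ci, cj)) for cj in features]
            for ci in features]

def style_loss(generated, style):
    """Mean squared difference between the two Gram matrices."""
    g, s = gram_matrix(generated), gram_matrix(style)
    n = len(g)
    return sum((g[i][j] - s[i][j]) ** 2
               for i in range(n) for j in range(n)) / (n * n)

# Two channels over four spatial positions (toy values).
style_features = [[1.0, 0.0, 1.0, 0.0],
                  [0.0, 1.0, 0.0, 1.0]]
# Same activations shifted by one position: the layout changes, the texture does not.
shifted = [[0.0, 1.0, 0.0, 1.0],
           [1.0, 0.0, 1.0, 0.0]]
# Both channels always active together: a different texture.
different = [[1.0, 1.0, 1.0, 1.0],
             [1.0, 1.0, 1.0, 1.0]]

print("loss for shifted layout:   ", style_loss(shifted, style_features))
print("loss for different texture:", style_loss(different, style_features))
```

Because the Gram matrix discards spatial position, the loss captures texture and color relationships rather than layout, which is why a photograph can keep its composition while taking on the look of a painting.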

Do you feel that style transfer could redefine how we understand originality in digital art?

Efficiency With Variational Autoencoders (VAEs)

Alongside more visible methods, Variational Autoencoders (VAEs) contribute to efficient data representation and image generation. Although less prominent than GANs or diffusion models, VAEs excel in providing a probabilistic framework that aids in synthesizing images with lower computational demands. They are often applied within academic research, signaling their importance in theoretical explorations and niche applications.

Some platforms use VAEs to improve efficiency, particularly in the early stages of image synthesis where speed is critical. The technique is frequently discussed in scholarly journals and can be explored further through resources on the evolution of AI images.
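The probabilistic framework behind VAEs rests on two small pieces: sampling a latent code from the distribution the encoder describes, and penalizing that distribution for drifting away from a standard normal. Here is a minimal sketch in plain Python, assuming a diagonal Gaussian latent; the `mu` and `log_var` values stand in for the outputs of a hypothetical encoder.

```python
import math
import random

def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps drawn from N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu, log_var):
    """KL divergence from N(mu, sigma^2) to N(0, 1), summed over dimensions."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv)
                      for m, lv in zip(mu, log_var))

rng = random.Random(0)
mu, log_var = [0.5, -1.0], [0.0, -2.0]  # hypothetical encoder outputs
z = reparameterize(mu, log_var, rng)

print("sampled latent code:", z)
print("KL penalty:", kl_to_standard_normal(mu, log_var))
```

Writing the sample as `mu + sigma * eps` keeps the randomness in `eps`, so gradients can flow through `mu` and `log_var` during training. The full training loss would add a reconstruction term, which is omitted here.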

What role do you think efficiency plays in balancing creative quality and speed?

Real-World Case Studies of Image Generator

High-Profile Art and Commercial Applications

A notable example of real-world application is the portrait “Edmond de Belamy,” an AI-generated artwork that fetched $432,500 at a Christie’s auction in 2018. This sale not only validated the technology but also underscored its potential in mainstream art. Major brands such as Coca-Cola and Nike now employ these systems for rapid visual prototyping and tailored campaign designs.

The usage extends into the entertainment sector, where innovative companies leverage these methods to create immersive visuals and interactive experiences. Many success stories demonstrate that these technologies are not merely theoretical but are actively reshaping advertising, entertainment, and even educational content, a shift documented in multiple market studies.

Have you witnessed the transformative power of art created by technology?

Comparison of Case Study Implementations

The following table provides a comparative overview of several notable case studies that have successfully adopted these visual synthesis tools. This summary highlights the inspiration, application impact, and regional contributions of each case.

Comprehensive Comparison of Case Studies

| Example | Inspiration | Application/Impact | Region |
|---|---|---|---|
| Edmond de Belamy | GANs Breakthrough | Art auction success ($432,500 sale) | Global |
| Midjourney | Iterative Design | 10M+ registered users | Global |
| DALL·E 2 | Text-to-Image Synthesis | Over 2M images generated daily | US/Europe |
| Stable Diffusion | Diffusion Model | Enhanced detail in creative work | Global; rapid adoption in Asia |
| Prisma | Style Transfer | Popular app for art transformations | Asia/Global |

How might these case studies inspire new avenues for your own creative ventures?

Automated Art Creator in Modern Image Generator Solutions

Integration in Design and Advertising

In today’s commercial landscape, automated creative systems have found a home in the design and advertising sectors. These systems allow brands to rapidly prototype visuals and deliver tailored content to diverse markets. With increased efficiency and consistent quality, companies can maintain competitive advantages and stay agile amid market shifts.

Case studies have revealed that leading brands like Coca-Cola and Nike have significantly benefited from rapid image synthesis, allowing them to create multiple iterations on the fly. The incorporation of these systems has been validated in market analyses that emphasize improved creative output and reduced production time.

How could an agile design process transform your view of brand storytelling?

User Accessibility and Educational Integration

Beyond commercial applications, these creative systems are transforming educational models. In regions such as Australia and Europe, visual synthesis tools have been integrated into digital literacy curricula. This exposure ensures that students can learn and master the fundamentals of cutting-edge technology from an early age.

The educational benefits are multifold: improved creative thinking, a better grasp of technological advancements, and enhanced problem-solving abilities. With initiatives taking root in classrooms, users from all backgrounds can access tools previously reserved for experts.

Do you think educational integration of these systems can spark a new generation of creative technologists?

Future Trends: Digital Image Synthesizer and Beyond

Technological Advancements and Predictive Models

Looking ahead, researchers predict that improvements in precision and control will further enhance these imaging systems. The next wave involves integrating three-dimensional modeling and video synthesis, which will open up new multimedia content creation opportunities. The capacity to generate intricate scenes and multi-dimensional narratives is already being explored in experimental projects.

Continued research aims to bring features such as more faithful prompt interpretation and real-time video generation. These advances are expected to open new possibilities for the creative process, affecting industries from film to interactive media. Academic sources report ongoing trials and technical validation of these enhancements.

Are you excited about the future capabilities that could revolutionize creative production?

Ethical Considerations and Regulatory Developments

As the power of these systems grows, so too does the need for robust ethical frameworks and regulatory guidelines. Regulators in the US and EU have recently begun moving toward stricter rules on dataset transparency and copyright. Ensuring informed consent and ethical data usage remains a crucial aspect of development.

Moreover, global industries are working together to establish guidelines that balance rapid innovation with cultural preservation. These measures are essential to prevent misuse while enabling continued creative breakthroughs. Such discussions are supported by various research articles on regulatory trends in AI art.

What are your thoughts on balancing innovation with ethical responsibility?

Exclusive Insights from Image Generator: A Creative Journey

This section offers a unique perspective that synthesizes the remarkable advances in visual creativity. Over decades, relentless innovation has paved the way for dynamic systems that render imaginative visuals with precision and flair. This narrative reflects on intriguing historical breakthroughs and the human ingenuity that continues to propel this field forward. Vibrant examples from renowned art auctions and groundbreaking experiments highlight how visual artistry has expanded into new dimensions.

Some transformative initiatives have sparked conversations in global forums, inspiring collaborations across varied industries. In a world of rapid evolution, experimental techniques have redefined traditional boundaries and challenged established norms. Pioneering researchers and artists now harness these novel systems to create works that captivate audiences, pushing the limits of conventional art. The journey described here is not just technical but soulful, underscoring an ever-evolving relationship between creativity and technology. As the landscape shifts, fresh ideas emerge, transporting us to uncharted creative realms. This reflective exploration encourages the reader to ponder the limitless potential of future visual narratives while embracing the innovative spirit that today’s advancements instill.

This overview invites you to embark on a journey of discovery and to think about the mechanisms behind emerging creative technologies. Let the creativity depicted in this narrative ignite your curiosity and inspire new ideas as you continue to the FAQ and conclusion.

FAQ

What defines the evolution of modern image synthesis?

The evolution is defined by successive breakthroughs from early digital image processing to the development of advanced neural networks such as GANs, diffusion models, and text-to-image systems. These developments have gradually shifted the landscape from simple filters to complex, interactive visual generation tools.

How do diffusion models work in generating detailed images?

Diffusion models convert random noise into highly detailed images by iteratively denoising data. They learn the reverse process of how noise is added to an image, enabling the generation of visuals that capture fine details and depth.

Why are ethical guidelines important for these systems?

Ethical guidelines ensure that data used for training such systems is obtained responsibly and that the rights of creators are respected. They help balance innovation with accountability, safeguarding against potential misuse while encouraging creative progress.

What role does text-to-image synthesis play in creative workflows?

Text-to-image synthesis allows users to input descriptive text and have the system generate images that closely reflect their intent. This process enables rapid prototyping and creative freedom while lowering the barrier to producing high-quality visuals.

How will future advancements impact the multimedia industry?

Future advancements, such as integration with 3D modeling and video synthesis, will revolutionize multimedia production by enabling the seamless creation of immersive, interactive content. This transformation holds promise for industries like film, virtual reality, and interactive design.

Conclusion

In summary, the journey through the evolution of visual synthesis has revealed transformative breakthroughs that continue to redefine creative expression. From early digital experiments to state-of-the-art systems like GANs, diffusion models, and text-to-image synthesis, innovation has been at the heart of this technology.

It is evident that these advanced systems are transforming how we approach design, advertising, and education by providing accessible and efficient tools that empower creativity. The future promises even more exciting developments, driven by technology that grows more sophisticated and ethically guided by research and regulations.

Your creative journey is just beginning. Explore these innovations, and for more information, visit our AI & Automation category or contact us directly. Have you experienced similar leaps in creativity? Share your thoughts, and join the conversation!
