What is Computer Vision? 6 Key Applications

Computer vision has become one of the most transformative branches of modern technology. It empowers machines to interpret visual data from photos and videos, revolutionizing industries from healthcare to automotive manufacturing. In this article, you will learn about its history, evolution, and the groundbreaking applications that define its future.

The content delves into the detailed evolution of this technology, supported by real-world examples and case studies. Every section aims to provide clear, digestible insights, ensuring readers from all backgrounds can appreciate the innovations behind computer vision. Enjoy a friendly and engaging read as we explore this dynamic field.

With input from historical insights and current breakthroughs, this comprehensive guide outlines technical advancements while ensuring all concepts are easily understood. Now, let’s embark on this journey into the world of computer vision!

📑 Table of Contents

- Introduction to Computer Vision
- Evolution and History of Computer Vision
- How Image Recognition Enhances Computer Vision
- Visual Processing Systems and Their Applications in Computer Vision
- Real-World Case Studies of Computer Vision
- Pattern Detection in Modern Computer Vision Solutions
- Future Trends: Machine Learning and Beyond in Computer Vision
- Intriguing Perspectives on Computer Vision Innovations
- FAQ
- Conclusion

Introduction to Computer Vision

Overview and Significance

Computer vision is a technology that enables machines to “see” and interpret images and videos through complex algorithms. From the early experiments in the 1960s to state-of-the-art neural networks today, its evolution has been remarkable. Researchers started with simple pattern recognition and edge detection techniques, which laid the groundwork for modern applications.

The journey began with pioneers such as Lawrence G. Roberts and Marvin Minsky, who envisioned computers capable of full scene understanding [Wikipedia]. Today, the value of this technology is evident in numerous domains, including healthcare diagnostics and self-driving cars.

This section also touches on how early challenges in visual data interpretation were overcome through innovative approaches during the 1970s and beyond. Additionally, linking modern breakthroughs to these initial experiments reveals the continuous improvements in both hardware and software architectures.

How do you think these historical developments have shaped today’s technological landscape? Have you experienced technology that seems to mimic human sight?

Key Concepts and Terminology

Understanding computer vision requires being familiar with specific terminology and foundational concepts. Early systems focused on image segmentation, object detection, and scene reconstruction using algorithms built to extract edges and textures. Over time, these methods evolved to incorporate mathematical models and statistical learning techniques for handling complex visual data.
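
To make the idea of extracting edges concrete, here is a minimal sketch of one classic approach, the Sobel operator, written in plain Python. It is an illustration only, not the method used by any particular early system; the function name `sobel_edges`, the `threshold` value, and the toy image are assumptions chosen for the example.

```python
def sobel_edges(image, threshold=100):
    """Return a binary edge map for a 2D grid of grayscale values (0-255).

    Border pixels are left as 0 because the 3x3 kernels need a full neighborhood.
    """
    gx_kernel = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]   # responds to horizontal intensity change
    gy_kernel = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]   # responds to vertical intensity change
    height, width = len(image), len(image[0])
    edges = [[0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = gy = 0
            for ky in range(3):
                for kx in range(3):
                    pixel = image[y + ky - 1][x + kx - 1]
                    gx += gx_kernel[ky][kx] * pixel
                    gy += gy_kernel[ky][kx] * pixel
            magnitude = (gx * gx + gy * gy) ** 0.5
            edges[y][x] = 1 if magnitude >= threshold else 0
    return edges


# Toy image (assumed for illustration): dark left half, bright right half.
image = [[0, 0, 0, 255, 255, 255] for _ in range(5)]
edge_map = sobel_edges(image)
for row in edge_map:
    print(row)
```

The interior rows mark the two columns that straddle the dark-to-bright boundary, which is exactly where a human would say the edge is.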

Researchers explored concepts like optical flow, motion estimation, and even early forms of augmented reality, innovations that underpinned later developments. Key technologies such as convolutional neural networks (CNNs) emerged later, inspired by how the visual cortex processes signals, notably through precursor models like the neocognitron developed by Kunihiko Fukushima.

These technical terms might seem intricate, but by breaking them down into simpler components, we can appreciate how each improvement adds robustness to modern systems. Early terminology focused on geometrical analysis and digital image processing before transitioning into deep learning and artificial neural networks.

Through exploring these concepts further, you can begin to understand the underlying structure of computer vision systems. What new meaning do these early visions provide when linked to today’s innovations? Have you ever pondered the leap from primitive edge detection to modern real-time video analysis?

Evolution and History of Computer Vision

Historical Milestones and Pioneering Work

The evolution of this technology started in the 1950s and 1960s, with foundational experiments such as the development of the first digital image scanner in 1957. This milestone allowed images to be converted into numerical grids, a critical step towards teaching computers how to “see.” Early pioneers like Lawrence G. Roberts advanced the field with 3D object recognition in the early 1960s, laying down pivotal groundwork.

In 1966, Marvin Minsky challenged students to develop systems that could describe what a camera captured. Such early experiments led to significant breakthroughs, including the first facial recognition systems created by Woodrow W. Bledsoe and I. Kanter using edge detection and feature matching in the late 1960s.

Historical developments did not stop there. The progression through the 1970s and 1980s introduced more rigorous quantitative analysis and the establishment of algorithms capable of efficient optical flow and motion estimation. This historical context emphasizes the remarkable transition from simple image scanning to full-scale three-dimensional scene interpretation.

For more in-depth historical context, see the historical timeline [Wikipedia]. How do you think these historical milestones continue to influence today’s cutting-edge applications? What specific moment in history fascinates you the most?

Technological Evolution and Major Innovations

Over the decades, technological evolution led to significant innovations such as the development of non-polyhedral and polyhedral modeling in the 1970s. This period was critical for establishing the mathematical and algorithmic foundations that drive contemporary systems. In the 1980s, the focus shifted towards more formal mathematical techniques like scale-space theory and contour modeling (snakes), which further enhanced visual analysis capabilities.

The introduction of the neocognitron by Kunihiko Fukushima around 1980 marked an essential transition towards what would later become known as convolutional neural networks (CNNs). The explosive growth of deep learning in the 2000s and 2010s, exemplified by Google Brain’s 2012 demonstration of a neural network recognizing images of cats using 16,000 processors, signaled the beginning of a new era in visual processing.

These innovations led to better handling of complex data, bringing forward new applications in autonomous vehicles and medical imaging. Each technological milestone built upon its predecessors, progressively enhancing capabilities and broadening the scope.

Further details on this evolution are available in this in-depth analysis [Wikipedia]. What area of this evolution intrigues you the most? How might past innovations shape future breakthroughs?

How Image Recognition Enhances Computer Vision

Role and Mechanisms of Image Recognition

Image recognition is one of the core functionalities that has propelled computer vision into modern use. With the ability to systematically identify objects, classify images, and detect subtle patterns, algorithms are now more capable than ever before. Early methods relied on manually extracted features, whereas today’s systems use deep learning to automatically infer information from images.

Systems based on convolutional neural networks (CNNs) first detect low-level features such as edges, colors, and simple textures, then combine them in deeper layers into more complex structures like shapes, object parts, and whole objects. This layered process is loosely modeled on the human visual cortex and provides a robust framework for handling diverse visual inputs. It is key to improving both the accuracy and the speed of visual data interpretation.
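
The layered process can be sketched with the three operations that make up a typical CNN stage: convolution, a ReLU activation, and max pooling. The snippet below is a hand-written illustration in plain Python, not a real framework's API. The kernel is fixed by hand here, whereas a trained network learns its kernel values, and all function names and the toy image are assumptions.

```python
def convolve2d(image, kernel):
    """Valid (no padding) 2D cross-correlation, the operation CNN layers call convolution."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(kernel[i][j] * image[y + i][x + j] for i in range(kh) for j in range(kw))
            for x in range(out_w)
        ]
        for y in range(out_h)
    ]


def relu(feature_map):
    """Keep positive responses and zero out negative ones."""
    return [[max(0, value) for value in row] for row in feature_map]


def max_pool2x2(feature_map):
    """Downsample by taking the maximum of each non-overlapping 2x2 window."""
    return [
        [
            max(feature_map[y][x], feature_map[y][x + 1],
                feature_map[y + 1][x], feature_map[y + 1][x + 1])
            for x in range(0, len(feature_map[0]) - 1, 2)
        ]
        for y in range(0, len(feature_map) - 1, 2)
    ]


# Toy 5x5 image (assumed): a bright vertical stripe on a dark background.
image = [[0, 0, 9, 9, 0] for _ in range(5)]
# Hand-picked kernel that responds to dark-to-bright transitions from left to right.
kernel = [[-1, 1], [-1, 1]]

conv = convolve2d(image, kernel)   # 4x4 map, positive at the rising edge, negative at the falling edge
activated = relu(conv)             # the negative (falling-edge) responses are removed
pooled = max_pool2x2(activated)    # 2x2 summary of where the feature fired
print(pooled)
```

Stacking several such stages, each operating on the previous stage's output, is what lets deeper layers respond to progressively larger and more complex structures.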

Current systems also incorporate newer architectures such as vision transformers (ViTs), which can match or outperform traditional CNNs on some benchmarks, particularly when trained on large datasets. Such improvements have significantly enhanced fields like autonomous driving, where rapid identification of pedestrians and traffic signs is critical for safety. These developments underscore a fundamental shift towards more intuitive visual data interpretation.
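
One way vision transformers differ from CNNs is in how they read the input: the image is cut into fixed-size patches, and each patch is flattened into a vector that the model treats like a token. The sketch below shows only that input step, under the assumption of a single-channel image whose sides are divisible by the patch size. The attention layers that follow are omitted, and the function name is hypothetical.

```python
def split_into_patches(image, patch_size):
    """Split a 2D image into non-overlapping square patches, each flattened row by row."""
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image dimensions must be divisible by patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patch = [
                image[top + dy][left + dx]
                for dy in range(patch_size)
                for dx in range(patch_size)
            ]
            patches.append(patch)
    return patches


# Toy 4x4 image (assumed) whose pixel values are simply 0..15.
image = [[row * 4 + col for col in range(4)] for row in range(4)]
patches = split_into_patches(image, 2)
for patch in patches:
    print(patch)
```

In a full model, each flattened patch would then be projected into an embedding and combined with position information before any attention is applied.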

For additional context and technical insights, see this in-depth research overview [Wikipedia]. How do you feel about the rapid progress in image recognition? Can you imagine scenarios where real-time image analysis is crucial in your daily life?

Impact on Systems and Real-Time Processing

Real-time processing powered by image recognition translates into practical solutions for industries ranging from autonomous vehicles to industrial quality control. The ability to instantly process an image and produce actionable results is essential in environments where time is critical. By leveraging deep learning, devices like smartphones can now perform complex visual analyses on the fly.

This constant stream of visual data processing enables systems to adapt quickly to changing conditions. For instance, in self-driving cars, the rapid identification of obstacles and traffic signals ensures a safer navigation experience. The use of models such as CNNs, reinforced by recent advancements like vision transformers, has allowed for substantial improvements in accuracy and responsiveness.
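
What "real time" means in practice can be expressed as a latency budget: at a given frame rate, every stage of the pipeline must finish before the next frame arrives. At 30 frames per second the budget is 1000 / 30 ≈ 33.3 ms per frame. The sketch below works through that arithmetic; the stage names and timings are hypothetical numbers chosen for illustration, not measurements.

```python
def frame_budget_ms(fps):
    """Time available to process one frame, in milliseconds."""
    return 1000.0 / fps


def meets_realtime(stage_latencies_ms, fps):
    """True if the summed per-frame latency fits inside the frame budget."""
    return sum(stage_latencies_ms.values()) <= frame_budget_ms(fps)


# Hypothetical per-frame timings for a sequential pipeline (milliseconds).
stages = {"capture": 4.0, "preprocess": 3.0, "inference": 18.0, "postprocess": 5.0}

total_ms = sum(stages.values())
print(f"total latency: {total_ms} ms")
print(f"30 fps budget: {frame_budget_ms(30):.1f} ms, ok: {meets_realtime(stages, 30)}")
print(f"60 fps budget: {frame_budget_ms(60):.1f} ms, ok: {meets_realtime(stages, 60)}")
```

With these assumed numbers the pipeline keeps up at 30 frames per second but not at 60, which is why faster models or dedicated hardware matter for time-critical uses.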

These improvements not only translate to enhanced user experiences but also foster increased safety and efficiency. Developing these systems is a result of years of iterative innovation, where each breakthrough builds towards faster and more reliable computing methods.

Additional technical details are available in this comprehensive history [Wikipedia]. In what ways do you see the impact of real-time visual processing affecting everyday life? How might further advancements redefine industries in the future?

Visual Processing Systems and Their Applications in Computer Vision

Technological Infrastructure and Algorithms

The backbone of modern visual processing systems lies in their sophisticated technological infrastructure and algorithms. Systems integrate multiple layers of deep neural networks to perform tasks such as object detection, segmentation, and classification. The algorithms used today owe their development to early research in image analysis and pattern recognition.

Early models that relied on manual feature extraction have been replaced by automated methods that use supervised and unsupervised learning. These approaches, which were significantly advanced by the development of CNNs and RNNs, now operate efficiently on a wide range of devices. The integration of these algorithms with edge computing has further enhanced their real-time performance, particularly on mobile devices.

Practical applications in retail and manufacturing, for example, use these systems to monitor inventory and conduct quality control. Error rates have fallen significantly thanks to advancements in these processing techniques. This shift has brought about novel applications that combine speed, accuracy, and low latency.

For further reading on the technological innovations behind these systems, see this technical guide [Wikipedia]. Have you encountered these advancements in your daily electronic devices? What potential applications excite you the most?

Industry Applications and Impact

Visual processing systems drive efficiency in a wide range of industrial applications. In manufacturing, for example, quality control systems use these technologies to inspect products at high speeds with exceptional accuracy. This minimizes human error, lowers defect rates, and improves overall productivity.
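
A very simple form of automated inspection compares each product image against a known-good reference and flags the part when too many pixels differ. The sketch below illustrates that idea only. Production systems typically use learned models and must handle alignment and lighting changes, and the tolerance values, function names, and toy images here are all assumptions.

```python
def defect_ratio(reference, sample, pixel_tolerance=20):
    """Fraction of pixels whose intensity differs from the reference by more than pixel_tolerance."""
    total = differing = 0
    for ref_row, sample_row in zip(reference, sample):
        for ref_px, sample_px in zip(ref_row, sample_row):
            total += 1
            if abs(ref_px - sample_px) > pixel_tolerance:
                differing += 1
    return differing / total


def is_defective(reference, sample, pixel_tolerance=20, max_defect_ratio=0.02):
    """Flag the sample when more than max_defect_ratio of its pixels deviate."""
    return defect_ratio(reference, sample, pixel_tolerance) > max_defect_ratio


# Toy 10x10 grayscale images (assumed): a uniform surface, a slightly brighter
# but acceptable part, and a part with a dark five-pixel scratch.
reference = [[200] * 10 for _ in range(10)]
good_part = [[205] * 10 for _ in range(10)]
scratched_part = [row[:] for row in reference]
for x in range(2, 7):
    scratched_part[4][x] = 40

print(is_defective(reference, good_part))       # small uniform shift stays within tolerance
print(is_defective(reference, scratched_part))  # 5 of 100 pixels deviate strongly
```

The two thresholds separate concerns: `pixel_tolerance` absorbs harmless variation in brightness, while `max_defect_ratio` decides how much real deviation is acceptable.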

In retail, automated checkout systems employ such systems to track customer selections and manage inventory with minimal intervention. The financial benefits of these applications are evident in reduced stock-outs and streamlined operations. Additionally, healthcare has seen significant improvements through the enhanced analysis of medical images, aiding in early diagnosis and treatment planning.

These systems are not just theoretical; they are employed in everyday applications across diverse sectors, where they are associated with improved productivity and accuracy. Further insights are available in these industry comparisons [Wikipedia].

How do these real-world implementations resonate with your experience? Do you see similar technology making an impact in your industry or community?

Real-World Case Studies of Computer Vision

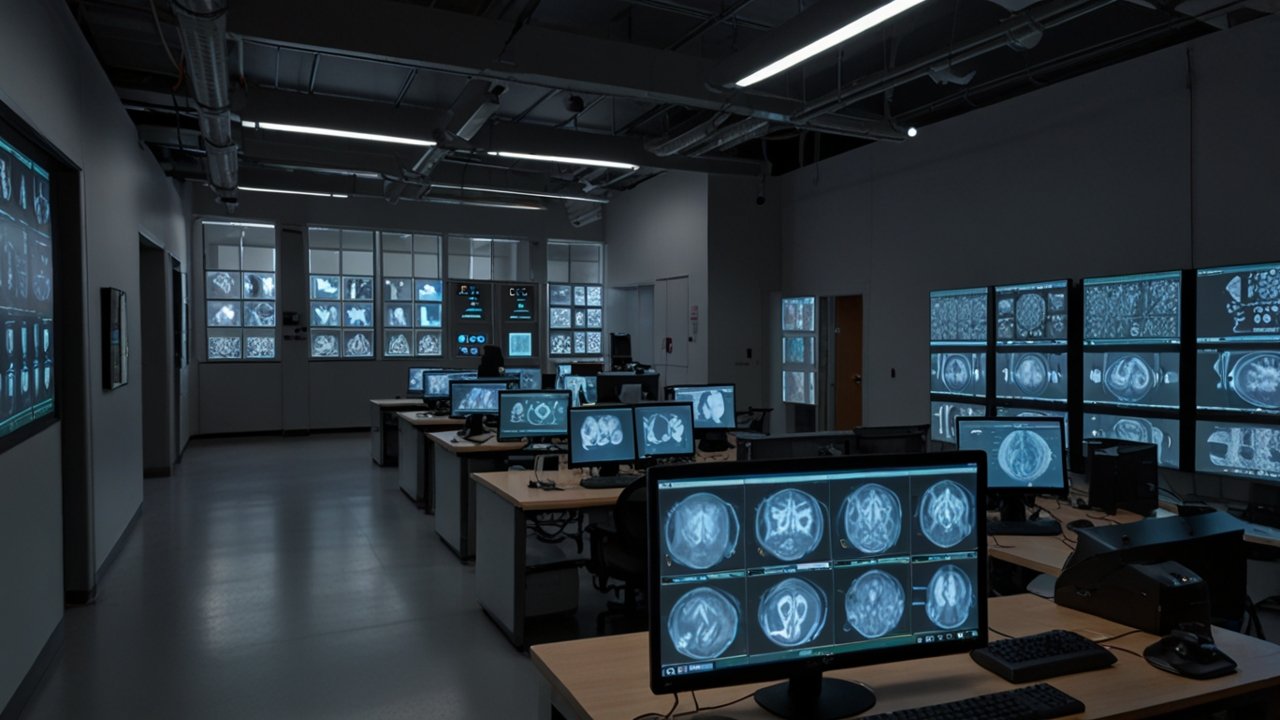

Healthcare and Medical Imaging

Case studies in healthcare highlight transformative outcomes achieved through the use of computer vision. Medical imaging systems now assist radiologists by accurately identifying anomalies in X-rays, MRIs, and CT scans. This level of precision is comparable to that of human specialists and, in some controlled situations, even exceeds it.

The early detection of conditions such as diabetic retinopathy has proven to be life-changing for many patients. For instance, algorithms designed for retinal scans have helped reduce vision loss by identifying early indicators that can be addressed in time. In addition, ongoing research continues to improve these systems by integrating advanced statistical methods and neural network innovations.

A detailed comparison reveals that these improvements contribute to a significant reduction in errors in medical diagnoses. In this context, the integration of accurate visual processing systems has become paramount for improving patient outcomes. For more insights, refer to the AWS overview [Wikipedia].

What are your thoughts on a future where medical diagnostics rely heavily on technology? Have you ever experienced or witnessed the benefits of such advancements in healthcare?

Autonomous Vehicles and Robotics

Autonomous vehicles rely on computer vision for real-time navigation and safety. Companies like Tesla, Waymo, and GM’s Cruise have integrated advanced visual systems that recognize pedestrians, other vehicles, and traffic signals. This has played a crucial role in progressing toward self-driving technologies.

The systems analyze scenes frame-by-frame, deploying deep neural networks that continuously adapt to changing road conditions. This real-time detection and processing are crucial for avoiding accidents and ensuring safe travel. These innovations reflect the culmination of decades of research and rigorous testing in varied environments.

Additionally, robotics has benefited from these visual capabilities, enabling automated systems to perform complex tasks in manufacturing and assembly lines. The integration of such technologies has led to increased productivity and reduced risk of human error in dangerous operational contexts.

For further reading on automotive advancements, see this detailed timeline [Wikipedia]. How do you envision the role of autonomous systems evolving in the near future? Do you feel safe relying on automated technologies in everyday life?

Comprehensive Comparison of Case Studies

| Example | Inspiration | Application/Impact | Region |
|---|---|---|---|
| Medical Imaging | Early Edge Detection | Enhanced diagnostic accuracy | Global |
| Autonomous Driving | Neural Networks | Real-time decision making | Global |
| Retail Automation | Pattern Recognition | Checkout-free shopping | North America |
| Manufacturing QC | 3D Reconstruction | Defect detection | Europe |
| Agricultural Systems | Image Segmentation | Crop health monitoring | Global |

In this section, we have seen tangible evidence of how computer vision transforms traditional practices into futuristic applications. What examples from these case studies inspire you most? Have you ever encountered breakthrough technology in your professional field?

Pattern Detection in Modern Computer Vision Solutions

Advanced Detection Techniques and Algorithms

Pattern detection is fundamental in modern computer vision solutions. Sophisticated algorithms can now identify regularities and anomalies in images, leading to improved detection accuracy. Early methods, which involved manual extraction of image features, have evolved into complex systems using reinforcement learning and deep learning.

These modern techniques integrate layered neural architectures with statistical modeling to refine results continuously. This integration enables systems to detect minute details such as micro-defects in manufacturing processes or subtle facial features in biometric security systems. The resulting improvements are palpable in industries requiring precise detection capabilities.
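
Before learned detectors, a common way to locate a known pattern was template matching: slide a small template across the image and score each position by how closely the pixels agree. The sketch below uses the sum of squared differences as the score. It is a classical baseline shown for intuition, not the neural approach described above, and the function name and toy data are assumptions.

```python
def find_best_match(image, template):
    """Return ((row, col), score) of the window with the lowest sum of squared differences."""
    th, tw = len(template), len(template[0])
    best_score, best_pos = None, None
    for y in range(len(image) - th + 1):
        for x in range(len(image[0]) - tw + 1):
            score = sum(
                (image[y + i][x + j] - template[i][j]) ** 2
                for i in range(th)
                for j in range(tw)
            )
            if best_score is None or score < best_score:
                best_score, best_pos = score, (y, x)
    return best_pos, best_score


# Toy data (assumed): a 2x2 pattern hidden in an otherwise empty 5x5 image.
template = [[9, 1], [1, 9]]
image = [[0] * 5 for _ in range(5)]
image[2][1], image[2][2] = 9, 1
image[3][1], image[3][2] = 1, 9

position, score = find_best_match(image, template)
print(position, score)
```

A score of zero means an exact match. Real images rarely match exactly, so practical systems accept the best score below some tolerance or use normalized measures that are less sensitive to lighting.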

Recent developments have led to the deployment of vision transformers, which further optimize the detection process. Coupled with federated learning approaches, these systems maintain privacy while still achieving high accuracy. For additional details, see these trend insights [Wikipedia].

How might these advanced detection algorithms revolutionize quality control and security in your industry? Have you experienced the benefits of improved pattern detection in automated systems?

Applications in Security and Surveillance

Security and surveillance are prime examples where robust pattern detection is indispensable. Modern systems use compact algorithms to continuously monitor live feeds, identifying anomalies that could indicate potential threats. These improvements have significantly enhanced automated surveillance in critical infrastructures such as airports and public transportation systems.

The integration of these systems with edge computing allows immediate processing of video data, leading to real-time alerts. This minimizes the response time required to address security breaches and ensures public safety. The historical evolution from simple edge detection to complex pattern analysis has been monumental in this sector.
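
A lightweight check that can run directly on an edge device is frame differencing: compare each frame with the previous one and raise an alert when a large enough share of pixels has changed. The sketch below illustrates the idea on tiny synthetic frames. The thresholds and names are assumptions, and real deployments add steps such as background modeling and noise filtering.

```python
def detect_motion(frames, pixel_threshold=25, area_threshold=0.05):
    """Return indices of frames that differ noticeably from the frame before them."""
    alerts = []
    for index in range(1, len(frames)):
        previous, current = frames[index - 1], frames[index]
        changed = sum(
            1
            for prev_row, cur_row in zip(previous, current)
            for prev_px, cur_px in zip(prev_row, cur_row)
            if abs(prev_px - cur_px) > pixel_threshold
        )
        total = len(current) * len(current[0])
        if changed / total > area_threshold:
            alerts.append(index)
    return alerts


# Synthetic 8x8 frames (assumed): an empty scene, then a bright 3x3 object
# appears, stays for one more frame, and disappears.
empty = [[10] * 8 for _ in range(8)]
occupied = [row[:] for row in empty]
for y in range(2, 5):
    for x in range(3, 6):
        occupied[y][x] = 200

frames = [empty, empty, occupied, occupied, empty]
alerts = detect_motion(frames)
print(alerts)
```

Alerts fire on the frames where the object appears and disappears, not while it stands still, because only changes between consecutive frames are measured.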

Modern surveillance systems achieve high accuracy rates while operating in challenging conditions such as low light and busy environments. Reported figures suggest that these technologies can reduce response times by up to 30% compared to conventional methods. How do you feel about enhanced security measures using advanced pattern detection? Do such systems make you feel safer in public spaces?

Future Trends: Machine Learning and Beyond in Computer Vision

Emerging Technologies and Integration

The future of computer vision is intertwined with emerging trends in machine learning and robotics. Experts predict that by 2025, the integration of artificial intelligence with robot automation will drive significant productivity gains. Recent market analyses forecast a growth rate approaching 30% over the next few years as technologies such as deep learning, federated learning, and edge computing mature.

Innovations in augmented and virtual reality will further enhance user interfaces and operational efficiency. The ongoing evolution of vision transformers and other neural models is expected to further improve efficiency in real-world applications. These advancements rely on continuous collaboration between research institutions and industry pioneers.

Technology leaders are embracing the potential of decentralization, whereby edge devices perform visual data processing, reducing latency and enhancing privacy. For more details on these projections, see this overview [Wikipedia]. What future trends excite you most? How do you envision these innovations impacting your daily activities?

Challenges and the Road Ahead

Despite rapid advancements, challenges remain in scaling computer vision applications universally. Issues such as data privacy, limited training data, and high computational requirements persist. Researchers are actively working on solutions like federated learning and synthetic dataset generation using generative AI to address these concerns.
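
Federated learning addresses the privacy concern by keeping images on each device and sharing only model parameters. In the widely used federated averaging scheme, a server combines the clients' parameters into a weighted average, with each client weighted by how many samples it trained on. The sketch below shows just that aggregation step, with model weights simplified to flat lists of floats. The function name and the numbers are illustrative assumptions.

```python
def federated_average(client_updates):
    """Combine client models into one, weighting each by its number of training samples.

    client_updates is a list of (weights, num_samples) pairs, where weights is a
    flat list of floats with the same length for every client.
    """
    total_samples = sum(num_samples for _, num_samples in client_updates)
    num_weights = len(client_updates[0][0])
    return [
        sum(weights[i] * num_samples for weights, num_samples in client_updates) / total_samples
        for i in range(num_weights)
    ]


# Two hypothetical clients: the second trained on three times as much data,
# so its parameters count three times as much in the average.
updates = [
    ([1.0, 2.0], 100),
    ([3.0, 4.0], 300),
]
global_weights = federated_average(updates)
print(global_weights)
```

Only the parameter lists cross the network in this scheme. The raw images that produced them never leave the client.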

Scaling these technologies for real-world applications requires not only technical innovation but also regulatory and ethical considerations. The focus is shifting towards systems that ensure data security while delivering high performance. Collaborative efforts between academia, industry, and regulatory bodies are crucial to navigate these challenges.

Future research is likely to emphasize sustainable development practices, with ongoing enhancements in neural network architectures and computational power. Continuous clinical trials in healthcare and pilot projects in smart cities provide a glimpse into a future where these challenges are effectively managed. What solutions do you believe are most promising for overcoming these hurdles? How important is ethical implementation in the evolution of computer vision?

Intriguing Perspectives on Computer Vision Innovations

This section offers a fresh perspective on an evolving domain that has reshaped how modern technology interacts with the environment. It highlights the journey of a revolutionary idea that began with simple experiments and evolved into a transformative force across multiple sectors. The narrative is enriched with insights drawn from historical experiments and modern advancements, demonstrating a clear timeline of progress that fuels today’s innovations. Readers are invited to reflect on early challenges that have now given way to sophisticated solutions used in diverse industries.

The discussion emphasizes milestones that have paved the way for enhanced performance and increased reliability. With an extensive focus on system efficiency and methodological breakthroughs, the content reveals several thought-provoking insights that bridge the past with the present. Unique viewpoints and detailed narratives provide a comprehensive understanding of where this field started and where it is headed. The article subtly challenges us to reconsider what is possible when technology and imagination converge.

It not only discusses technical achievements but also reflects on the profound impact these advances have on practical applications. The blend of historical context and forward-thinking ideas gives readers a deep appreciation for the relentless pursuit of innovation. Readers are encouraged to engage with the ideas presented and consider the broader implications for society and industry, ultimately leaving them with a sense of wonder about the future possibilities.

This reflection invites you to explore new dimensions of innovation while pondering how such developments alter everyday experiences. In essence, the narrative serves as an invitation to reimagine the limits of what technology can achieve.

FAQ

What is computer vision?

Computer vision is a field of artificial intelligence that enables machines to interpret and understand visual information from digital images and videos through complex algorithms and neural networks.

How has the technology evolved over the years?

The technology has evolved from simple edge detection and manual feature extraction in the 1960s to the adoption of deep learning, CNNs, and advanced neural architectures, leading to applications in various industries.

What role does image recognition play in these systems?

Image recognition allows systems to accurately detect and classify objects within an image, serving as a critical component in tasks like autonomous driving, medical diagnosis, and industrial quality control.

What challenges does this technology face?

Challenges include data privacy, computational demands, and scaling applications for real-world usage, which researchers are addressing through federated learning and synthetic data generation methods.

How will advancements in machine learning impact the future of computer vision?

Advancements in machine learning promise enhanced automation, improved accuracy, and the integration of emerging technologies like augmented reality, ultimately broadening the scope and impact of computer vision applications.

Conclusion

Computer vision stands as a revolutionary field that continues to evolve and impact diverse industries. Its journey from rudimentary feature detection to today’s sophisticated neural architectures reveals a legacy of innovation and practical excellence. The advancements in image recognition, visual processing, and pattern detection have redefined how machines interact with the world.

As we look to the future, emerging trends and deeper integration with machine learning promise an even greater influence on everything from healthcare to autonomous systems. By bridging historical milestones with modern breakthroughs, the future appears promising for both research and practical applications.

What role will you play in this evolving landscape? Share your thoughts and experiences in the comments below!
